Some product sets definitely require more care and feeding than others… that’s all I’ll say in that regard lest I let go with the rant that is on the tip of my tongue. What I’m about to present is in regard to Cisco 3702 access points specifically on 8.5.151.0 code, although I have no doubt the condition applies to many models and code versions.

Problem statement: The freakin’ APs cut and run. They go over the wall, but they are real sneaky about it. They do it in a way that ain’t so easy to detect… Or in Cisco’s own words: “As per FN70330 – IOS AP stranded due to flash corruption issue, due to a number of software bugs an AP in normal operation, the flash file system on some IOS APs may become corrupt over time. This is seen especially after an upgrade is performed to the WLC but not necessarily limited to this scenario. AP may be working fine, servicing client, etc, while on this problem state which is not easily detectable”.

See this Cisco doc as the source of the above statement, and please know that I’m not saying that MY issue is absolutely THIS issue. Although it could be. There are many fine bugs to choose from.

What it Looks Like, and What it Doesn’t Look Like.

Cisco rightly says that the “problem state is not easily detectable”, and I agree. We’ll focus on a single 3702 AP for this blog, but I know from first-hand conversation that some folks have been bitten by dozens or hundreds of similar free-spirited APs all going for an intent-based spontaneous joyride in the name of innovation.

Prime Infrastructure doesn’t show my AP as being “out”, and I have yet to find any reliable way to show this condition via any other reports in PI. If you ping it, it responds. Look at it in CDP, it’s there. But… all is not well, sir. Not at all, sir. Despite the obvious indicators. This AP that has been up and fine and doing its job suddenly got cabin fever:

So… the normal ways of finding out that APs are essentially out of service (like using your expensive NMS) don’t apply in this scenario, and you basically have to stumble upon it, or be alerted when users can’t connect to the AP, which unfortunately is a common canary in the coal mine when dealing with bugs in this particular framework.

Say there, did I mention that the AP never recovers in this situation? It stays in perpetual “Downloading” until you figure out a way to recover it. Value. Buy more licenses… because the one this AP is using is worthless while it’s in this innovative state of self-determinism.

No Resetting Through the Controller UI

It stands to reason that maybe rebooting the AP will get it back to where it needs to be. That’s a pretty common troubleshooting step. But you can’t do it from the controller interface while the AP is trying to go to a happy place that it will never reach.

Allow me to digress… I like to think that when the AP gets to this point, it probably hears Soul Asylum singing Runaway Train in its mind…

It seems no one can help me now

I’m in too deep

There’s no way out

This time I have really led myself astray

Runaway train never going back

Wrong way on a one-way track

Seems like I should be getting somewhere

Somehow I’m neither here nor there

Ahem. Back to topic. (But what a great song.)

Off to the Switch We Go

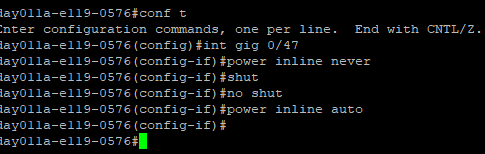

Being that we can’t reboot THE VALUE from the controller interface while the AP is riding the runaway train, we need to visit the switch for command line operations. Basically, we pull the PoE plug via command entry, then restore it (informational note: no innovation licenses are required to enter commands- yet).
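For the curious, here is a minimal sketch of that PoE bounce as a command list. I am assuming the usual `power inline never` / `power inline auto` pair on a Catalyst IOS switch, and the function name and the interface in the example are made up for illustration; your platform and port will differ.

```python
def poe_bounce_commands(interface: str) -> list[str]:
    """Build the IOS lines that cut and then restore PoE on one switch port.

    Assumption: the switch accepts 'power inline never' and
    'power inline auto' in interface configuration mode.
    """
    name = interface.strip()
    if not name:
        raise ValueError("interface name is required")
    return [
        "configure terminal",
        f"interface {name}",
        "power inline never",  # pull the PoE plug on the AP
        # In practice, pause a few seconds here before restoring power.
        "power inline auto",   # restore PoE so the AP boots again
        "end",
    ]


if __name__ == "__main__":
    # Hypothetical port name, for illustration only.
    print("\n".join(poe_bounce_commands("GigabitEthernet1/0/12")))
```

Paste the output into a session on the switch the AP hangs off of, or feed it to whatever automation you already trust.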

If all goes well, a couple of minutes later you’ll have an AP that has atoned for its separatist thoughts of independence and freedom, and you can welcome it back to the fleet.

Simple Fix (Maybe)

I’m guessing that you’d agree after reading this that the fix for my situation was fairly easy. I’ve seen maybe 20 of these goofball 3702 instances in the last year, now more reliably found after my office mate found a way to poll them with some degree of success via SNMP using AKIPS.
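The detection logic itself is simple once you have the data. Here is a rough sketch, assuming your poller can hand you each AP’s current state and how long it has been in that state. The `ApSample` fields, the 30-minute threshold, and the sample AP names are all my own placeholders, not anything AKIPS or Cisco defines.

```python
from dataclasses import dataclass


@dataclass
class ApSample:
    """One polled reading for an AP (hypothetical shape, for illustration)."""
    name: str
    state: str               # e.g. "Registered" or "Downloading"
    minutes_in_state: float  # how long the AP has reported this state


def find_stuck_aps(samples, stuck_state="Downloading", max_minutes=30.0):
    """Return sorted names of APs sitting in stuck_state longer than max_minutes."""
    return sorted(
        s.name
        for s in samples
        if s.state == stuck_state and s.minutes_in_state > max_minutes
    )


if __name__ == "__main__":
    polled = [
        ApSample("ap-lib-201", "Registered", 4320.0),
        ApSample("ap-gym-014", "Downloading", 5.0),     # a normal image pull
        ApSample("ap-dorm-332", "Downloading", 1440.0),  # riding the runaway train
    ]
    print(find_stuck_aps(polled))
```

The threshold matters: an AP legitimately pulling code after an upgrade also shows “Downloading” for a while, so only the ones that never leave that state are worth a trip to the switch.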

So… finding them may be harder than fixing them, depending on how you are equipped and IF you are dealing exactly with whatever nuanced issue I happen to have in play. But let me again bring you back to this Cisco doc on the topic of corrupt AP flash. Your situation may end up being a lot messier than mine, given the hoops mentioned in the document.

After a WLC code upgrade, don’t we verify the AP status in the Wireless tab? I found PI not reliable at all. Even alerts are not sent in a timely manner.

Thanks for reading, Tarun. PI has been rocky to use for sure. Definite gaps in functionality, and often nonsensical values in reports. The thing that really pisses me off about Cisco is the veneer created by their marketing that it’s a Cadillac-grade wireless solution. Gratuitous complexity, endless bugs and a developer’s-eye view of the world versus the WLAN doer’s real needs detract from what Cisco execs think they are selling people at top dollar.